Achieving Peak INT4 Quantization Performance Hardware Needs

Achieving Peak INT4 Quantization Performance Hardware Needs - Identifying the critical processor types for INT4 workloads

To genuinely unlock the performance increases offered by INT4 quantization for transformer workloads, understanding which processor types are critical is fundamental. While halving the bit depth from INT8 holds the theoretical promise of substantially higher peak computation, realizing this on real hardware presents specific challenges. Processors demonstrating key advantages in handling INT4 are typically those equipped with specialized compute units, such as matrix cores, that are explicitly optimized for low-bit arithmetic. The ability to efficiently perform the massive number of multiply-accumulate operations required by large models at 4-bit precision, while managing the inherent quantization noise and maintaining reasonable model accuracy, is not a universal trait across all hardware. Consequently, the focus sharpens on processor architectures where INT4 implementation is robustly supported at the silicon level and complemented by effective software tools, differentiating those that merely claim INT4 capability from those that can truly deliver sustained, high-performance inference at this reduced precision.

Delving into the specifics of hardware implementation, several characteristics stand out when trying to discern which processor architectures are truly critical for pushing the boundaries of INT4 performance.

It seems many contemporary processor designs, particularly those aimed at acceleration tasks or constrained environments, incorporate specialized computation blocks, sometimes labeled Neural Processing Units (NPUs) or similar names. These units often appear architecturally geared towards efficiently handling the very low-bit integer arithmetic fundamental to INT4 inference. This specialization suggests a potential for energy efficiency profiles that might be hard to replicate on more general-purpose CPUs or GPUs performing the *exact same* low-precision calculations.

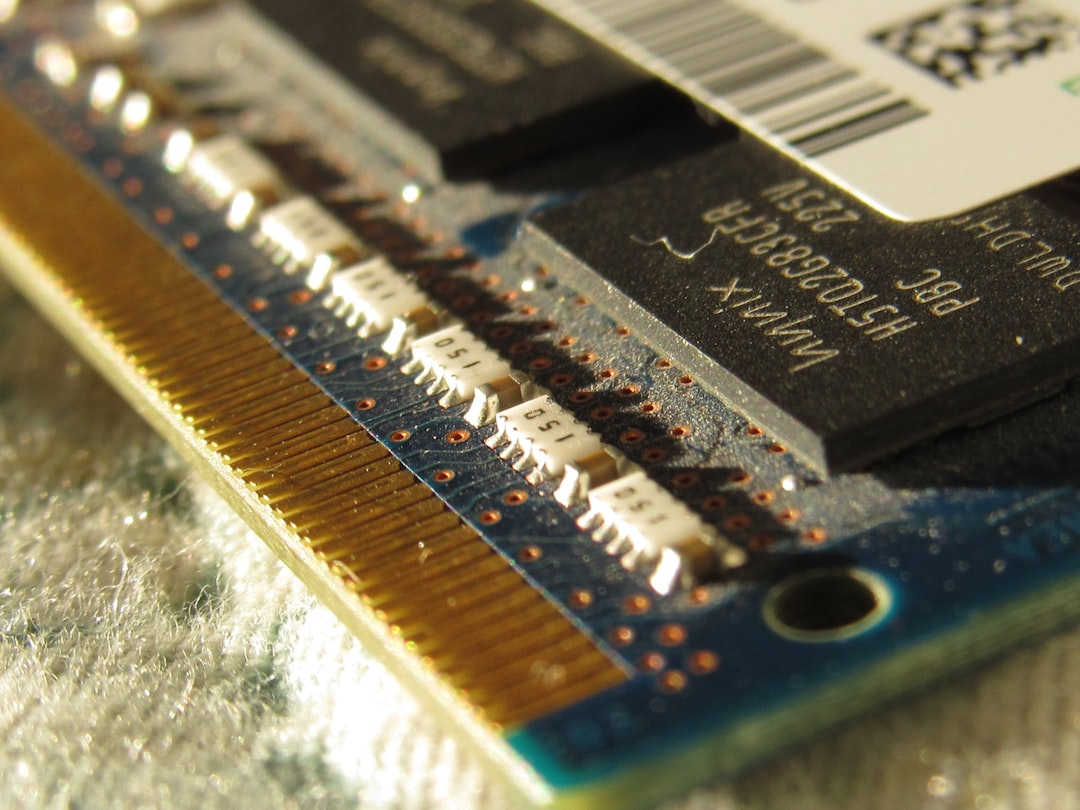

Furthermore, achieving peak INT4 throughput often hinges significantly on the internal memory hierarchy. Processors that excel in this domain appear to have substantial, high-bandwidth on-die cache structures designed to keep localized data readily accessible. Because each operation consumes so little data (just 4 bits per weight or activation) and the arithmetic itself is cheap, the compute units can drain their inputs very quickly, so any stall on slower external memory accounts for a proportionally larger share of total run time than it does at higher precision. Minimizing these off-chip accesses is paramount.
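
A rough way to see why memory traffic dominates is a roofline-style estimate. The sketch below is illustrative only: the peak-compute and bandwidth figures are made-up placeholders rather than measurements of any real chip, and the helper names (`ops_per_byte`, `attainable_tops`, `report`) are hypothetical. It assumes each weight is fetched from external memory once and reused across the batch.

```python
def ops_per_byte(bits_per_weight, batch_size=1):
    """Arithmetic intensity of a weight-streaming matmul.

    Assumption: every weight is read from memory once and contributes one
    multiply and one add for each item in the batch.
    """
    bytes_per_weight = bits_per_weight / 8
    return 2 * batch_size / bytes_per_weight


def attainable_tops(peak_tops, bandwidth_gbs, intensity):
    """Roofline estimate: the lower of the compute and memory ceilings."""
    memory_bound_tops = bandwidth_gbs * intensity / 1000.0  # Gops/s -> Tops/s
    return min(peak_tops, memory_bound_tops)


# Placeholder figures, NOT taken from any datasheet.
PEAK_TOPS = {8: 400.0, 4: 800.0}
BANDWIDTH_GBS = 1000.0


def report(batch_size):
    """Return (bits, attainable TOPS, fraction of peak) for INT8 and INT4."""
    rows = []
    for bits in (8, 4):
        tops = attainable_tops(
            PEAK_TOPS[bits], BANDWIDTH_GBS, ops_per_byte(bits, batch_size)
        )
        rows.append((bits, tops, tops / PEAK_TOPS[bits]))
    return rows


if __name__ == "__main__":
    for batch in (1, 64, 512):
        for bits, tops, fraction in report(batch):
            print(f"batch={batch:4d} INT{bits}: {tops:7.1f} TOPS ({fraction:.1%} of peak)")
```

With these placeholder numbers, small batches sit far below the compute ceiling at both precisions, which is the situation where on-die reuse matters most.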

Looking deeper, the effective, sustained throughput achievable on a given processor isn't just about the theoretical number of INT4 multiply-accumulate operations per clock cycle. A critical factor seems to be the efficiency and capacity of the data paths connecting memory interfaces to the INT4 compute units and then moving intermediate results. Architectures optimized for a high, continuous *flow* of data appear to achieve better sustained utilization than those with high peak compute but bottlenecks in their internal data transport fabric.

Perhaps one of the most tangible, and sometimes frustrating, observations is the dependency on the software ecosystem. The theoretical INT4 capability baked into the silicon frequently requires highly optimized, hardware-vendor-specific software libraries and compiler toolchains to be fully unlocked in the real world. Without this crucial software layer, carefully tuned to the specific micro-architecture and internal data flows, the raw hardware potential often remains just that – potential. This reliance raises questions about flexibility and potential vendor lock-in.

Finally, some processor designs appear to integrate smaller, dedicated hardware units specifically tasked with accelerating certain supporting operations that are common in INT4 pipelines. Tasks like applying zero-point adjustments, performing necessary scaling operations after multiplication, or handling quantization details are offloaded from the main high-throughput INT4 compute blocks. This frees up the core arithmetic units to stay busy with the intensive matrix multiplication, contributing to improved overall pipeline efficiency and higher sustained performance.
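
To make the supporting work concrete, here is a minimal sketch of what zero-point adjustment and post-multiplication scaling look like for a single dot product. It assumes the common affine convention `real = scale * (code - zero_point)`, which the text above does not specify; the function names are hypothetical. The raw integer accumulation is the part a matrix unit would handle, and the correction terms and final scaling are the kind of work that could be offloaded.

```python
def int_dot_with_correction(qw, qx, zw, zx):
    """Integer dot product of zero-point-shifted codes.

    Computes sum((w - zw) * (x - zx)) using the raw product sum plus
    correction terms, so the inner loop never touches the zero-points.
    """
    n = len(qw)
    acc = sum(w * x for w, x in zip(qw, qx))  # raw multiply-accumulate
    acc -= zx * sum(qw)                       # weight-side correction
    acc -= zw * sum(qx)                       # activation-side correction
    acc += n * zw * zx                        # constant term
    return acc


def dequantized_dot(qw, qx, scale_w, zw, scale_x, zx):
    """Apply the combined scale after the integer accumulation."""
    return scale_w * scale_x * int_dot_with_correction(qw, qx, zw, zx)


if __name__ == "__main__":
    qw, zw, scale_w = [0, 15, 7, 3], 8, 0.1   # unsigned 4-bit weight codes
    qx, zx, scale_x = [1, 2, 14, 9], 7, 0.05  # unsigned 4-bit activation codes
    print(dequantized_dot(qw, qx, scale_w, zw, scale_x, zx))
```

The sum over the weight codes depends only on the weights, so it can be precomputed once per output channel, leaving only the activation-side term for run time.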

Achieving Peak INT4 Quantization Performance Hardware Needs - Why advertised INT4 throughput remains elusive on some platforms

Despite INT4 quantization holding the clear theoretical promise of doubling peak computational throughput relative to INT8, recent work continues to highlight the persistent challenge in translating this potential into actual, sustained performance on various hardware platforms. While initial studies confirm the feasibility for tasks like language model inference without significant accuracy loss, achieving the full latency reduction suggested by the theoretical peak remains an active area of research and engineering focus, indicating the gap between advertised capability and real-world execution is still being addressed.

One frequently finds that the headline INT4 performance figures don't fully materialize on many platforms for practical workloads. Several architectural and implementation details seem to persistently limit reaching those theoretical peaks.

First, getting the 4-bit data precisely where it needs to go within the processor seems harder than the raw arithmetic. The necessary internal data movement logic – packing and unpacking these small values into wider transport buses or registers – isn't always capable of keeping the dedicated computation units continuously fed without introducing stalls or reducing the effective data bandwidth leading into the arithmetic core.
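
The packing and unpacking step can be sketched in a few lines. This is an illustration of the general idea, not any vendor's layout: it assumes signed two's-complement values with the low nibble stored first, and real hardware may order or interleave nibbles differently.

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    values = list(values)
    if any(not -8 <= v <= 7 for v in values):
        raise ValueError("values must fit in signed 4 bits (-8..7)")
    if len(values) % 2:
        values.append(0)  # pad to a whole number of bytes
    packed = bytearray()
    for low, high in zip(values[0::2], values[1::2]):
        packed.append((low & 0xF) | ((high & 0xF) << 4))
    return bytes(packed)


def unpack_int4(packed, count):
    """Recover `count` signed 4-bit integers from packed bytes."""
    values = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            # Sign-extend: nibbles 8..15 represent -8..-1.
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values[:count]


if __name__ == "__main__":
    original = [-8, 7, 0, -1, 3]
    blob = pack_int4(original)
    print(blob.hex(), unpack_int4(blob, len(original)))
```

Every value costs a mask, a shift, and a sign extension on the way in or out, which is why this step can throttle the arithmetic core when it is not handled by dedicated logic.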

Moreover, the compiler's ability to effectively map the diverse operations within a neural network – which involve more than just matrix multiplies – onto the specific and sometimes peculiar low-level instructions available for INT4 execution often appears suboptimal. This can lead to inefficient instruction scheduling and resource underutilization when running complete, complex models compared to isolated kernels.

A critical, often overlooked, bottleneck seems to be the synchronization and data handoff between the specialized INT4 matrix multiplication units and other parts of the processor pipeline handling auxiliary operations like applying biases or activation functions. Even if the matrix unit is blazingly fast, mismatches in throughput or latency with these dependent stages can force the high-speed engine to wait, limiting the overall sustained rate for a given layer.

Even with large on-die caches, the fragmented memory access patterns of some model architectures, combined with the very small size of INT4 elements, can result in poor cache line utilization. Because a single cache line holds many 4-bit elements (a 64-byte line, for example, holds 128 of them), one miss can stall a correspondingly large number of operations, and scattered accesses may use only a small fraction of each line that is fetched. Complex access patterns can also prevent caches from effectively amortizing memory latencies, reducing the sustainable rate at which data can be delivered to the compute units.

Finally, the inconvenient truth is that typical transformer models aren't composed *entirely* of operations perfectly suited for the dedicated INT4 matrix hardware. Layers or operations that must execute on scalar units, different precision hardware, or specialized functional blocks require costly transitions between execution contexts. These diversions from the peak INT4 pathway significantly reduce the *average* throughput observed over the course of an entire model inference, which is what truly matters for latency.
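
The effect on average throughput follows Amdahl's law. The sketch below uses hypothetical fractions chosen purely for illustration; `overall_speedup` is an assumed helper name, and the fraction refers to the share of baseline run time spent in operations that the INT4 matrix hardware can accelerate.

```python
def overall_speedup(accelerated_fraction, unit_speedup):
    """Amdahl's law: end-to-end speedup when only part of the work is faster.

    accelerated_fraction: share of baseline time that runs on the fast path.
    unit_speedup: how much faster the fast path executes that share.
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    remaining = 1.0 - accelerated_fraction
    return 1.0 / (remaining + accelerated_fraction / unit_speedup)


if __name__ == "__main__":
    # Hypothetical splits, not measurements of any model.
    for fraction in (0.95, 0.80, 0.60):
        print(f"{fraction:.0%} on the INT4 path at 2x -> "
              f"{overall_speedup(fraction, 2.0):.2f}x overall")
```

Even a modest share of work left on slower units pulls the end-to-end gain well below the 2x that the matrix unit alone would suggest.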

Achieving Peak INT4 Quantization Performance Hardware Needs - Performance disparities across different hardware vendor ecosystems

Looking across the range of hardware options from various providers, it becomes clear that performance consistency for INT4 quantization workloads is not a given. There are significant disparities. Each vendor's particular approach to silicon design and the ecosystem of software they build around it results in distinct levels of efficiency when executing INT4 operations. Key differentiating factors often include the architecture's internal movement of tiny data elements, the degree to which software is tightly coupled and optimized for the specific hardware, and how effectively memory bandwidth and latency are managed for low-bit data patterns. These technical variances directly impact how closely a system can approach its theoretical INT4 performance ceiling compared to alternatives. Moreover, the trend of vendors developing highly tailored optimizations to gain an edge tends to amplify these differences, potentially making it difficult or inefficient to port workloads between ecosystems. For those focused on extracting maximum INT4 performance, navigating these vendor-specific landscapes and their resulting performance variations is essential.

Here are some less obvious observations regarding how performance actually shakes out across different hardware vendor offerings when dealing with INT4 quantization:

1. Beyond the raw count of INT4 compute units, the precise way vendors structure the internal pipelines and interconnects within these blocks seems to lead to subtle but significant variations. This can mean that while two platforms might theoretically offer the same peak MACs, their ability to sustain high utilization across different model shapes or even achieve competitive power efficiency for the same workload can differ unexpectedly, sometimes favoring less obvious architectural choices.

2. The depth and maturity of the vendor's software stack, particularly the parts responsible for scheduling and fusing operations *before* they hit the INT4 hardware, introduces substantial variance. Some vendor toolchains excel at identifying complex graph patterns and mapping them efficiently, including non-matmul operations adjacent to INT4 blocks, while others appear more limited, resulting in fragmented execution that leaves theoretical compute cycles idle during transitions.

3. Achieving peak performance isn't just about the compute engine; it's critically dependent on how well data *flows* to and from it. The design and capacity of the internal memory fabrics, the on-die networks connecting compute units to caches and memory controllers, are often proprietary and vary significantly between vendors. Weaknesses in this internal plumbing can become the effective bottleneck, regardless of the theoretical compute rate, leading to situations where a platform struggles to keep its powerful engines fed.

4. A significant portion of the performance often comes down to opaque, low-level details orchestrated by vendor-specific microcode or firmware running beneath the standard instruction set. The sophistication of this hidden layer (how effectively it manages parallel execution, handles dependencies, and pipelines operations) can vary widely, resulting in performance discrepancies that are hard to predict from spec sheets alone.

5. Finally, the level of direct hardware assistance for operations essential to practical INT4 inference, such as the rapid calculation and application of dynamic per-tensor or per-group quantization parameters, is not uniform. Platforms that offer efficient, hardware-accelerated pathways for these supporting tasks avoid offloading them to slower scalar units or requiring software workarounds, creating a notable performance gap when deploying models that rely on these techniques for accuracy.
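
As an illustration of the per-group parameters mentioned in the last point, the following sketch computes a scale and zero-point for each group of weights. It assumes asymmetric quantization to unsigned 4-bit codes with the range widened to include zero, which is one common convention and not necessarily what any particular platform implements; all function names are hypothetical.

```python
def quantize_group(values, bits=4):
    """Asymmetric quantization of one group to unsigned `bits`-bit codes."""
    qmax = (1 << bits) - 1
    low = min(min(values), 0.0)   # widen the range so 0.0 is representable
    high = max(max(values), 0.0)
    scale = (high - low) / qmax
    if scale == 0.0:
        scale = 1.0               # all-zero group: any scale works
    zero_point = round(-low / scale)
    codes = [min(qmax, max(0, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def quantize_per_group(weights, group_size):
    """Quantize consecutive groups independently, one (scale, zp) per group."""
    return [
        quantize_group(weights[i:i + group_size])
        for i in range(0, len(weights), group_size)
    ]


def dequantize_per_group(groups):
    """Map codes back to real values with real = scale * (code - zero_point)."""
    restored = []
    for codes, scale, zero_point in groups:
        restored.extend(scale * (c - zero_point) for c in codes)
    return restored


if __name__ == "__main__":
    weights = [0.11, -0.42, 0.37, 0.05, 10.0, -20.0, 5.0, 0.3]
    for codes, scale, zero_point in quantize_per_group(weights, group_size=4):
        print(codes, round(scale, 4), zero_point)
```

In this toy input the first group gets a much finer scale than the second, which is the accuracy benefit of grouping; the cost is a min, max, and division per group that must run somewhere when the parameters are computed dynamically.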

Achieving Peak INT4 Quantization Performance Hardware Needs - The necessary software support for effective hardware utilization

Achieving true efficiency from hardware designed for INT4 requires more than just having the silicon capabilities; robust software layers are fundamental to making that hardware work effectively. Simply possessing the physical ability to perform low-bit calculations isn't sufficient if the software cannot properly orchestrate the intricate flow of data, schedule computations efficiently on the specific chip architecture, and manage the processing pipeline without introducing delays. Without highly tuned software finely matched to the hardware's nuances, the raw processing power often remains partially utilized, never reaching its intended potential. A notable challenge is the ongoing reliance on often proprietary vendor-specific toolchains and libraries. While this tight coupling can sometimes be essential for squeezing out maximum performance from a particular piece of silicon, it raises legitimate concerns regarding vendor lock-in and complicates the deployment of solutions across varied hardware from different providers. Successfully navigating this crucial software-hardware interface is absolutely necessary to genuinely unlock peak INT4 capabilities instead of settling for impressive but unrealized theoretical specifications.

Unlocking the performance promises of INT4 hardware turns out to depend heavily on the quality of the software layered above the silicon. It's not just about having the specialized matrix units; the execution engine driving them matters immensely.

* Frankly, merely possessing high-throughput INT4 calculation blocks on a chip means little if the software libraries aren't smart enough to continually shovel data into them. Keeping those hungry compute units busy necessitates intricate data scheduling, prefetching, and precise memory choreography at the software level to avoid precious cycles wasted waiting on data fetches.

* Optimizing compilers play a surprisingly large role, though their behavior is sometimes frustratingly opaque. Their ability to effectively 'tile' large, complex tensor operations – breaking them into smaller chunks that fit neatly into limited on-die caches and register files – directly dictates how efficiently the hardware's INT4 units can be utilized without excessive off-chip memory traffic.

* Transitions between computational phases using different data formats or precisions (like INT4 matrix multiplies followed by FP32 bias adds or activation functions) introduce significant overhead. The software's finesse in managing the necessary format conversions, data packing/unpacking, and reordering during these handoffs is critical; clumsy handling here can choke the entire pipeline and crater sustained performance.

* For the dynamic quantization schemes often needed to maintain accuracy with INT4, the speed and efficiency of the software routines calculating and applying per-tensor or per-group scales and zero-points are paramount. If these aren't highly optimized and tightly integrated, they become a bottleneck, creating bubbles in the processing pipeline and negating hardware speedups.

* Ultimately, getting peak performance from complex hardware involves effectively coordinating work across numerous parallel execution units. The runtime software layer is responsible for orchestrating this, balancing the load, minimizing synchronization costs, and managing data flow between different parts of the chip. Its effectiveness (or lack thereof) fundamentally determines how much of the theoretical aggregate throughput is actually achievable.
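
The tiling idea from the list above can be sketched as a blocked matrix multiply. This is a plain-Python illustration of the loop structure only: the tile size is an arbitrary assumption, and a real compiler would choose it from cache and register-file capacities and emit hardware-specific instructions for the inner block.

```python
def matmul_tiled(a, b, tile=4):
    """Blocked integer matmul: c = a @ b, processed one tile at a time.

    a is m x k and b is k x n, given as lists of rows. Each (i0, j0, k0)
    step touches only a tile-sized block of a, b, and c, which is the
    working set a compiler tries to keep in on-die memory.
    """
    m, k, n = len(a), len(b), len(b[0])
    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, tile):
        for j0 in range(0, n, tile):
            for k0 in range(0, k, tile):
                # Inner block: edges are clipped when sizes are not multiples.
                for i in range(i0, min(i0 + tile, m)):
                    row_a, row_c = a[i], c[i]
                    for kk in range(k0, min(k0 + tile, k)):
                        a_ik, row_b = row_a[kk], b[kk]
                        for j in range(j0, min(j0 + tile, n)):
                            row_c[j] += a_ik * row_b[j]
    return c


if __name__ == "__main__":
    a = [[(i + j) % 16 - 8 for j in range(6)] for i in range(5)]  # INT4-range values
    b = [[(i * j) % 16 - 8 for j in range(7)] for i in range(6)]
    print(matmul_tiled(a, b, tile=4)[0])
```

The result is identical to an untiled multiply; only the order of memory accesses changes, which is exactly the degree of freedom the compiler is exploiting.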

Achieving Peak INT4 Quantization Performance Hardware Needs - Evaluating hardware readiness for emerging 4-bit formats

As 4-bit quantization methods evolve, moving beyond standard INT4 towards variants like NF4 or FP4, assessing whether current hardware is genuinely ready for these emerging formats is paramount. While the theoretical upsides for efficiency and memory are clear, translating these into consistent, real-world performance necessitates hardware architectures capable of more than just basic 4-bit multiplication. It requires a deep compatibility with the unique computational patterns and data handling specific to these newer, potentially more complex 4-bit schemes. Evaluating this readiness involves looking beyond peak theoretical numbers to understand how gracefully processors handle the practical demands – the subtle variations in representation and the computational nuances these emerging formats introduce – and how effectively the necessary software infrastructure can leverage them.
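
To show why a non-integer 4-bit format asks more of the hardware than INT4, the sketch below decodes the same 16 bit patterns two ways. The FP4 layout used here (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1, often written E2M1) is an assumption; the text above does not say which FP4 variant is meant, and NF4 uses a different, table-based set of values that is not reproduced here.

```python
def decode_int4(code):
    """Two's-complement INT4: 16 evenly spaced integers from -8 to 7."""
    return code - 16 if code >= 8 else code


def decode_fp4_e2m1(code):
    """Decode an assumed E2M1 FP4 code (sign | 2-bit exponent | 1-bit mantissa)."""
    sign = -1.0 if code & 0x8 else 1.0
    exponent = (code >> 1) & 0x3
    mantissa = code & 0x1
    if exponent == 0:
        magnitude = 0.5 * mantissa  # subnormal: 0 or 0.5
    else:
        magnitude = (1.0 + 0.5 * mantissa) * 2.0 ** (exponent - 1)
    return sign * magnitude


if __name__ == "__main__":
    for code in range(16):
        print(f"{code:04b}  INT4={decode_int4(code):3d}  FP4={decode_fp4_e2m1(code):5.1f}")
```

The INT4 values are uniformly spaced and can feed an integer multiplier directly, while the FP4 values are unevenly spaced, so the datapath needs either floating-point-style logic or a lookup step before accumulation.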

Here are some aspects regarding the state of hardware readiness for these aggressive 4-bit formats that continue to surface, sometimes unexpectedly:

The sheer density of computation promised by INT4, while theoretically powerful, appears in some cases to push hardware designs right up against thermal limitations. We observe that while the compute units themselves can crank out operations rapidly, the resulting localized heat generation can necessitate dynamic throttling mechanisms. This means the achievable sustained performance, the rate you can actually run inference for a significant duration, is often capped by cooling solutions or power envelopes, sitting noticeably below the peak throughput advertised in ideal scenarios.

It's become clear that achieving truly portable INT4 inference is hampered by a lack of standardization. The specifics of how quantization parameters (like scales and zero-points) are applied, or even how intermediate values are rounded in the low-level hardware, aren't uniformly defined or implemented across different vendors. Consequently, taking the exact same quantized model artifact and running it on two different platforms can yield slightly different numerical outputs, which makes robust validation, debugging, and guaranteeing consistent behavior a real challenge.
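
A small example of how a rounding choice alone changes results: the sketch below quantizes the same values with two tie-breaking rules. Which rule a given platform uses is not stated here; both functions are illustrative, and the inputs are deliberately chosen to land exactly on ties.

```python
import math


def round_half_even(x):
    """Ties go to the nearest even integer (Python's built-in behavior)."""
    return round(x)


def round_half_away(x):
    """Ties go away from zero."""
    return int(math.copysign(math.floor(abs(x) + 0.5), x))


def quantize(values, scale, rounder):
    """Scale, round with the given rule, and clamp to the signed INT4 range."""
    return [max(-8, min(7, rounder(v / scale))) for v in values]


if __name__ == "__main__":
    values = [0.25, 0.75, 1.25, -0.25, -1.25]  # exact ties at scale 0.5
    print(quantize(values, 0.5, round_half_even))
    print(quantize(values, 0.5, round_half_away))
```

With only 16 representable levels, a one-step difference on a tie is a large relative change, so mismatched rounding rules are enough to make two platforms disagree on the same model artifact.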

We've seen instances where reaching the promised INT4 throughput is surprisingly sensitive to the mundane details of the input tensor shapes. If the dimensions of matrices involved in calculations don't perfectly align with a specific hardware architecture's internal tile sizes or preferred processing blocks, performance can sometimes drop sharply. This suggests inefficiencies or less-optimized fallback paths in handling shapes that aren't perfectly 'sweet spots,' perhaps requiring awkward padding or less parallel execution.
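
One way to estimate this sensitivity is to compute how much of the issued work is padding. The tile dimensions below are hypothetical and do not describe any specific architecture; the sketch assumes the hardware always processes whole tiles and that misaligned shapes are padded up to the next multiple.

```python
def padded(dim, tile):
    """Round `dim` up to the next multiple of `tile`."""
    return -(-dim // tile) * tile


def tile_utilization(m, n, k, tile_m, tile_n, tile_k):
    """Fraction of multiply-accumulates that do useful (non-padding) work."""
    useful = m * n * k
    issued = padded(m, tile_m) * padded(n, tile_n) * padded(k, tile_k)
    return useful / issued


if __name__ == "__main__":
    TILE = (16, 16, 64)  # hypothetical tile shape (m, n, k)
    for m in (16, 17, 1):
        util = tile_utilization(m, 4096, 4096, *TILE)
        print(f"m={m:3d}: {util:.1%} of issued MACs are useful")
```

Under these assumptions, going from 16 rows to 17 nearly halves utilization, and a single-row input uses only one sixteenth of each tile.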

At the throughput targets associated with peak INT4, even minute overheads become significant bottlenecks. The time taken for on-chip synchronization between different processing blocks, or the cycles lost transitioning execution context between the dedicated INT4 matrix unit and a standard scalar or FP32 unit for a simple bias addition or activation, accumulates disproportionately. These transition costs, often small in absolute terms, eat away at the potential sustained rate when executing a full neural network layer.

The practical challenge of validating numerical correctness when porting INT4 workloads is significant. Achieving a high degree of numerical equivalence, perhaps 'bit-for-bit' reproducibility or matching results within a very tight tolerance, between different hardware platforms is complicated by those subtle differences in how low-level arithmetic and rounding are handled. This requires considerable effort in vendor-specific testing and characterization, adding a layer of complexity beyond just verifying raw speed figures.
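
A cross-platform check of this kind can start from something as simple as the helper below. It is a minimal sketch: `compare_outputs` is a hypothetical name, the default tolerance is an arbitrary placeholder, and an acceptable threshold has to be chosen per model and per task.

```python
def compare_outputs(reference, candidate, abs_tol=1e-3):
    """Summarize how closely two platforms' outputs agree."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same length")
    diffs = [abs(r - c) for r, c in zip(reference, candidate)]
    return {
        "bit_exact": all(r == c for r, c in zip(reference, candidate)),
        "max_abs_diff": max(diffs, default=0.0),
        "num_outside_tol": sum(d > abs_tol for d in diffs),
    }


if __name__ == "__main__":
    platform_a = [1.0, 2.0, 3.0]
    platform_b = [1.0, 2.0005, 3.01]
    print(compare_outputs(platform_a, platform_b))
```

Reporting bit-exactness separately from tolerance-based agreement keeps the two goals distinct, since the first is often unattainable across vendors while the second may still be acceptable.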
